Coletrain

I usually just lurk on this forum whenever I have computer problems, but this time I felt the need to share a solution to a problem that I couldn't find any answers to with my google-fu.

(TLDR at the bottom)

So I have a simple mirrored two-drive storage pool (2x 4TB Seagate Barracudas) using Windows Storage Spaces, which I use for storing all my media and documents. Storage Spaces was telling me I was low on capacity, so I went through all my media and deleted anything that wasn't at least 3 Mbps quality. I deleted at least 1TB worth of media and got usage on the volume down to 1.51TB (as reported in Windows Explorer). This is when I noticed the huge discrepancy between the capacity Storage Spaces said I had left and what I knew I should have had. It was telling me I was using 6.11 TB of 7.27 TB pool capacity, which makes no sense: even accounting for the capacity lost to resiliency (a two-way mirror stores every file twice, so 1.51TB of data takes about 3.02TB of pool) and partial slab utilization, usage should have been about 3.1TB at most.
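As a rough check of these numbers, here is a minimal Python sketch. It assumes a two-way mirror keeps exactly two full copies of every byte, ignores slab rounding and metadata, and assumes Windows displays binary units (1 "TB" = 1024^4 bytes) while the drives are sold in decimal units; the function names are hypothetical. Truncating rather than rounding to two decimals reproduces the 7.27 TB figure shown for the pool.

```python
import math

TIB = 1024 ** 4  # Windows shows binary units but labels them "TB"

def raw_pool_tb(drive_count: int, drive_bytes: int) -> float:
    """Raw pool size in Windows "TB" for drives sold in decimal bytes."""
    return drive_count * drive_bytes / TIB

def truncate2(x: float) -> float:
    """Cut (not round) to two decimals, which matches the 7.27 figure."""
    return math.floor(x * 100) / 100

def mirror_footprint_tb(data_tb: float, copies: int = 2) -> float:
    """Pool capacity used by data_tb of files if every byte is stored `copies` times."""
    return data_tb * copies

pool_tb = truncate2(raw_pool_tb(2, 4 * 10**12))  # two "4TB" (4 * 10^12 byte) drives
expected_tb = mirror_footprint_tb(1.51)            # 1.51 TB of files per Explorer
reported_tb = 6.11                                 # usage Storage Spaces reported

print(f"pool capacity:  {pool_tb:.2f} TB")
print(f"expected usage: {expected_tb:.2f} TB")
print(f"unexplained:    {reported_tb - expected_tb:.2f} TB")
```

By this count, roughly 3.09 TB of the reported usage is not backed by any live file.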

I tried emptying the Recycle Bin, defragging/consolidating the partition, and minimizing the Volume Shadow Copy capacity. None of this helped, and to me it just felt like for some reason Windows wasn't "giving back" the capacity, or was otherwise failing to notice the freed space and reclaim it.

As for the solution, it's more of a quick fix: it doesn't actually prevent the problem from happening, and it leaves no more than a clue as to why it happens in the first place. What I ended up doing was creating dummy files on the volume to max out its capacity, then deleting them to see whether that would trigger some sort of capacity recovery, and it actually worked.

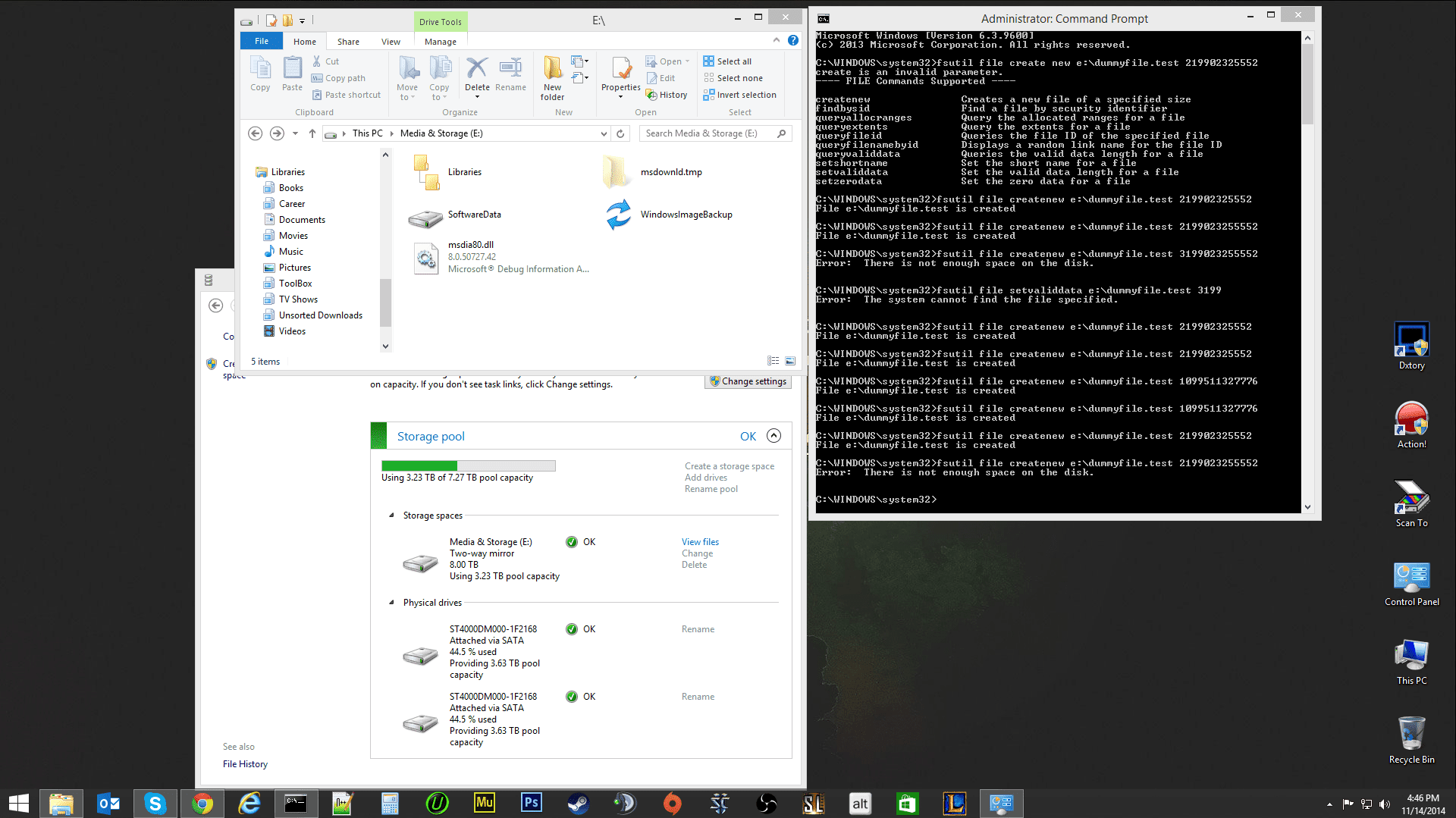

I used the method described in "Quickly Generate Large Test Files in Windows" to create the dummy files.

I started by creating a 1TB file (`fsutil file createnew e:\dummyfile.test 1099511627776`), which maxed out the pool capacity. I then deleted the file, and the pool was now reading "Using 5.45 TB of 7.27 TB pool capacity".

I then created a 2TB file (`fsutil file createnew e:\dummyfile.test 2199023255552`), which again maxed out the pool capacity, and after I deleted the file the capacity read "Using 3.47 TB of 7.27 TB pool capacity".
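The byte counts passed to fsutil in these steps are exact powers of 1024 (1 TiB = 2^40 bytes). The Python sketch below shows that and builds the same create-then-delete commands. The helper names are hypothetical, the `e:\dummyfile.test` path is taken from the post, and running the commands is left commented out: it only works on Windows, and fsutil usually needs an elevated prompt.

```python
import os
import subprocess

TIB = 1024 ** 4  # the post's "1TB" file is 2**40 bytes

def createnew_cmd(path: str, size_tib: int) -> list[str]:
    """Build an `fsutil file createnew <path> <length-in-bytes>` command."""
    return ["fsutil", "file", "createnew", path, str(size_tib * TIB)]

def fill_and_delete(path: str, size_tib: int) -> bool:
    """Hypothetical helper: create the dummy file, then delete it (Windows only).
    Returns True if fsutil reported success."""
    result = subprocess.run(createnew_cmd(path, size_tib),
                            capture_output=True, text=True)
    print(result.stdout or result.stderr)
    if os.path.exists(path):  # only delete if the file was actually created
        os.remove(path)
    return result.returncode == 0

for size in (1, 2):
    print(" ".join(createnew_cmd(r"e:\dummyfile.test", size)))

# fill_and_delete(r"e:\dummyfile.test", 2)  # uncomment to actually run it on Windows
```

The printed commands match the two fsutil invocations quoted in the post.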

I still felt like there was a little more capacity to be found, so I then tried to create a 3TB file, which of course returned "Error: There is not enough space on the disk". That makes sense: including resiliency, the file would have taken 6TB of pool capacity, in addition to the 1.5TB of actual data I had on the volume. Funnily enough, I then had to start all over again, because the capacity was now reading 7.27 of 7.27 TB but this time I had no file to delete.
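The reasoning about the failed 3TB attempt can be checked with a small Python sketch. It is a simplification that assumes a two-way mirror and counts only the 1.51 TB of live files, not the stale usage figure; the function names are hypothetical. By this count the 2 TB case needs 7.02 TB of a 7.27 TB pool, so it sits close to the limit.

```python
def pool_needed_tb(data_tb: float, new_file_tb: float, copies: int = 2) -> float:
    """Pool capacity needed once a new file is added to a mirrored volume."""
    return (data_tb + new_file_tb) * copies

def fits_in_pool(data_tb: float, new_file_tb: float,
                 pool_tb: float = 7.27, copies: int = 2) -> bool:
    """True if live data plus the new file, both mirrored, fit in the pool."""
    return pool_needed_tb(data_tb, new_file_tb, copies) <= pool_tb

for size in (1, 2, 3):
    needed = pool_needed_tb(1.51, size)
    print(f"{size} TB file: needs {needed:.2f} of 7.27 TB -> fits: {fits_in_pool(1.51, size)}")
```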

From there I created a 2TB file and deleted it, which brought me down to the same 5.45 TB reading I got after creating the 1TB file on my first try. I then tried to create another 2TB file, which of course went beyond the capacity, except that this time the storage pool seemed to register that the 2TB file was never created (as opposed to staying capped at the 7.27TB usage reading after the previous attempt to create a 3TB file).

The storage pool is now reading 3.23 TB of 7.27 TB pool capacity, which is about what I thought it should have been! Keep in mind I probably have a lot of 256MB slabs that aren't fully utilized, because I store a lot of small files/documents on this drive, and this would account for the extra 210GB of space still "missing".

Edit: OK, so I just optimized/defragmented the volume again and my space efficiency jumped from 93% to 97%. Storage Spaces is now reading 3.09 TB of 7.27 TB usage. Optimizing wasn't doing anything before! This is great, but also kind of silly...
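To put the leftover figures in slab terms, here is a back-of-the-envelope Python sketch. It assumes a two-way mirror, uses the post's 256 MB figure, and treats all of the unexplained usage as slab padding, which is the post's own guess rather than a confirmed cause; the function names are hypothetical.

```python
SLAB_MIB = 256  # slab size taken from the post

def unexplained_tb(reported_tb: float, data_tb: float, copies: int = 2) -> float:
    """Reported pool usage minus the ideal mirrored footprint of the live data."""
    return reported_tb - data_tb * copies

def slab_equivalents(tb: float, slab_mib: int = SLAB_MIB) -> float:
    """How many slab-sized chunks (binary units) make up `tb` of pool capacity."""
    return tb * 1024 ** 2 / slab_mib

for reported in (3.23, 3.09):  # before and after the second optimize pass
    extra = unexplained_tb(reported, 1.51)
    print(f"{reported} TB reported: {extra:.2f} TB unexplained, "
          f"about {slab_equivalents(extra):.0f} slabs' worth")
```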

TLDR: My 2x 4TB mirrored Storage Spaces pool was reading 6.11 TB/7.27 TB usage even though I only had 1.51 TB of data. Creating and deleting dummy files with the `fsutil` command to max out the capacity on the volume seems to smack Windows upside the head hard enough to register the correct capacity usage of 3.09 TB / 7.27 TB.

My question for everyone else is: WTF is going on here? It shouldn't behave like this, right? I feel like I've found some kind of drive capacity black hole in Storage Spaces.

My Computer

- OS: Windows 8.1
- Computer type: PC/Desktop
- System Manufacturer/Model: Custom
- CPU: i7-4930K
- Motherboard: Sabertooth X79
- Memory: 32GB
- Graphics Card(s): 4GB GTX 770
- Monitor(s) Displays: ASUS VG248QE (primary) / HP 2511x (secondary)
- Screen Resolution: 1080
- Hard Drives: 128GB Samsung PRO (OS), 500GB Samsung EVO (Programs/Games), 2x 4TB Barracuda mirrored with Storage Spaces (Documents/Media)
- PSU: AX1200i
- Case: Caselabs Merlin SM8
- Cooling: Custom loop
- Keyboard: Corsair K70
- Mouse: Logitech G502 / Artisan Shiden mousepad
- Internet Speed: Telus 50/10
- Browser: Chrome